LLMs 32. Large Language Models (LLMs): Reinforcement Learning — PPO Section

Quick Overview

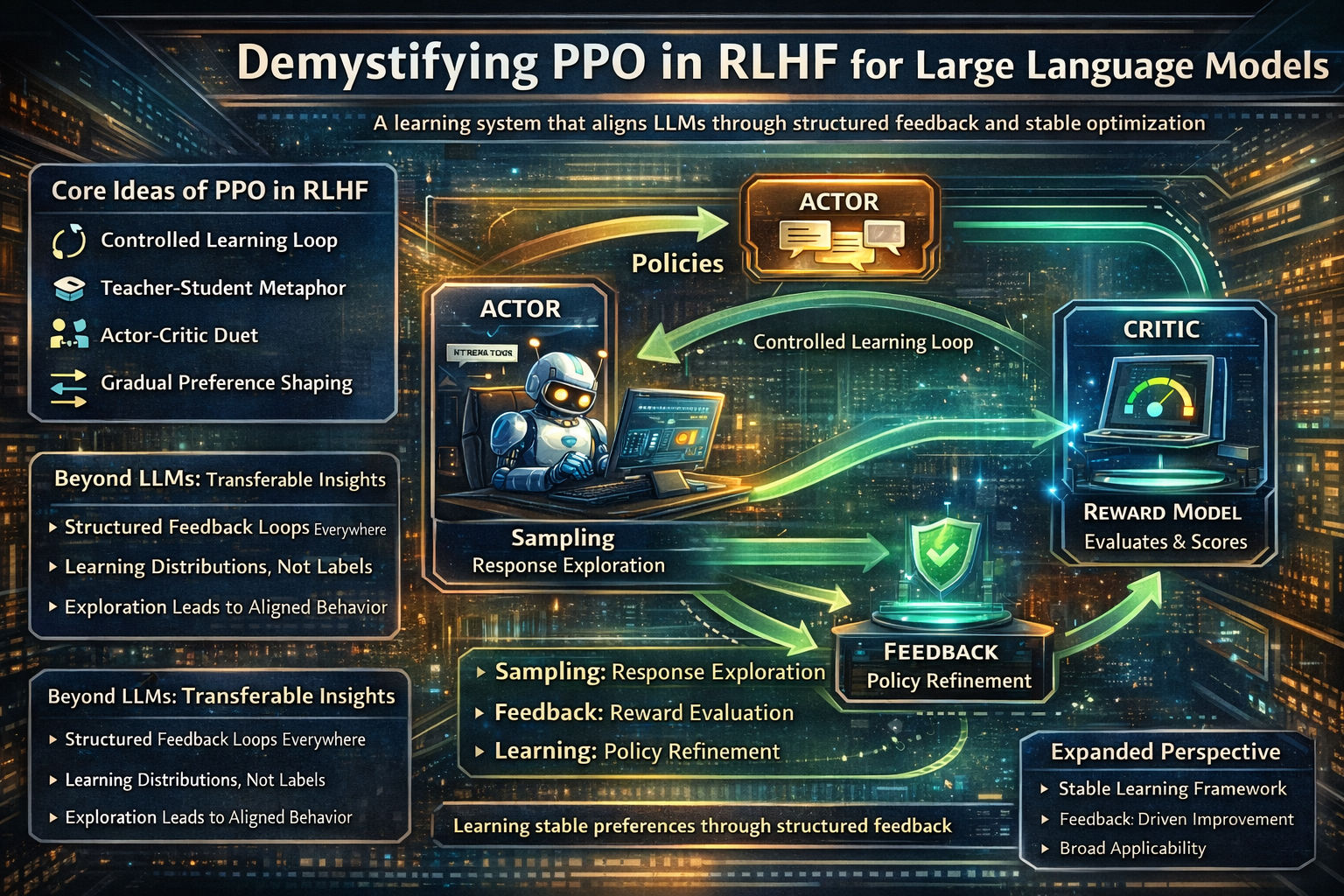

This learning-oriented guide explains Proximal Policy Optimization (PPO) within Reinforcement Learning from Human Feedback (RLHF), covering the PPO training loop, actor–critic roles, sampling as exploration, reward modeling, feedback loops, and stability versus efficiency trade-offs.

PPO in RLHF for Large Language Models: A Learning-Oriented Guide

This post is designed to help you understand PPO in RLHF not just as an algorithm, but as a learning system. Instead of focusing on formulas, we focus on roles, signals, and feedback loops—the same ideas that appear across reinforcement learning, control systems, and even human education.

1. Why PPO Is Used in RLHF

Large language models are first trained to imitate data. That makes them fluent, but not necessarily aligned with human preferences. RLHF exists to close this gap.

PPO (Proximal Policy Optimization) is used because it offers a practical balance between learning efficiency and stability. It allows the model to improve based on rewards, while preventing updates that are so large they destabilize behavior.

At a high level, PPO answers one question:

How can we improve a model’s behavior without letting it change too fast?

2. RLHF as a Teacher–Student System

A helpful way to understand RLHF is to view it as a structured learning loop:

- The model acts like a student attempting answers.

- The reward model acts like a teacher evaluating quality.

- PPO plays the role of a curriculum designer, ensuring learning steps are gradual.

The student is allowed to explore different answers, but feedback continuously nudges it toward preferred behavior. Over time, this transforms raw language ability into aligned behavior.

This framing is useful because it generalizes beyond LLMs. Similar loops appear in robotics, recommendation systems, and game-playing agents.

3. The PPO Training Loop Explained

PPO in RLHF can be understood as a repeating cycle:

- The model samples responses to prompts.

- A reward model evaluates those responses.

- The policy is updated using PPO to favor higher-reward outputs.

What matters is not a single response, but the distribution of responses the model learns to produce.

This is why PPO operates on probabilities rather than hard decisions—it reshapes tendencies, not fixed answers.

4. Actor–Critic: Two Roles, One Policy

PPO relies on an actor–critic structure.

The actor is the language model itself. It decides what token to generate next by producing a probability distribution.

The critic estimates how good that decision was, in terms of expected reward. It does not generate language; it evaluates it.

This separation mirrors many real systems:

- Decision vs evaluation in economics

- Action vs feedback in motor control

- Hypothesis vs validation in science

Understanding this duality makes PPO easier to reason about.

5. What “Sampling” Really Means in PPO

Sampling is not just data generation—it is exploration.

By allowing the model to produce varied responses, PPO ensures the model does not collapse too early into safe but suboptimal behavior. This exploration is what allows new, better strategies to emerge.

The reward model then shapes which explorations are reinforced and which are discouraged.

This idea connects directly to:

- Exploration–exploitation tradeoffs

- Curriculum learning

- Diversity control in generation systems

6. How Rewards Shape Model Behavior

Rewards in PPO are continuous signals, not binary labels. A response is not simply right or wrong—it is better or worse relative to alternatives.

This allows fine-grained preference shaping:

- Politeness

- Helpfulness

- Safety

- Conciseness

Importantly, rewards do not guarantee truth. They encode preferences, not facts. This explains both the power and the limitations of RLHF.

7. Transferable Insights Beyond LLMs

Understanding PPO in RLHF gives you tools that apply far beyond language models:

- Any system with feedback can be framed as reinforcement learning

- Stable optimization often requires constrained updates

- Separating decision-making from evaluation improves robustness

- Learning distributions matters more than learning single outputs

These principles appear in robotics, recommendation engines, autonomous agents, and even organizational decision systems.

Final Perspective

PPO is not just an optimization algorithm—it is a controlled learning philosophy. It assumes models will make mistakes, but that with structured feedback and stable updates, those mistakes can be shaped into aligned behavior.

Once you internalize this loop, RLHF stops feeling mysterious and starts looking like a natural extension of how learning systems—human or artificial—actually improve.

Comments (0)