LLMs 36. Large Language Models (LLMs) — Reinforcement Learning

Quick Overview

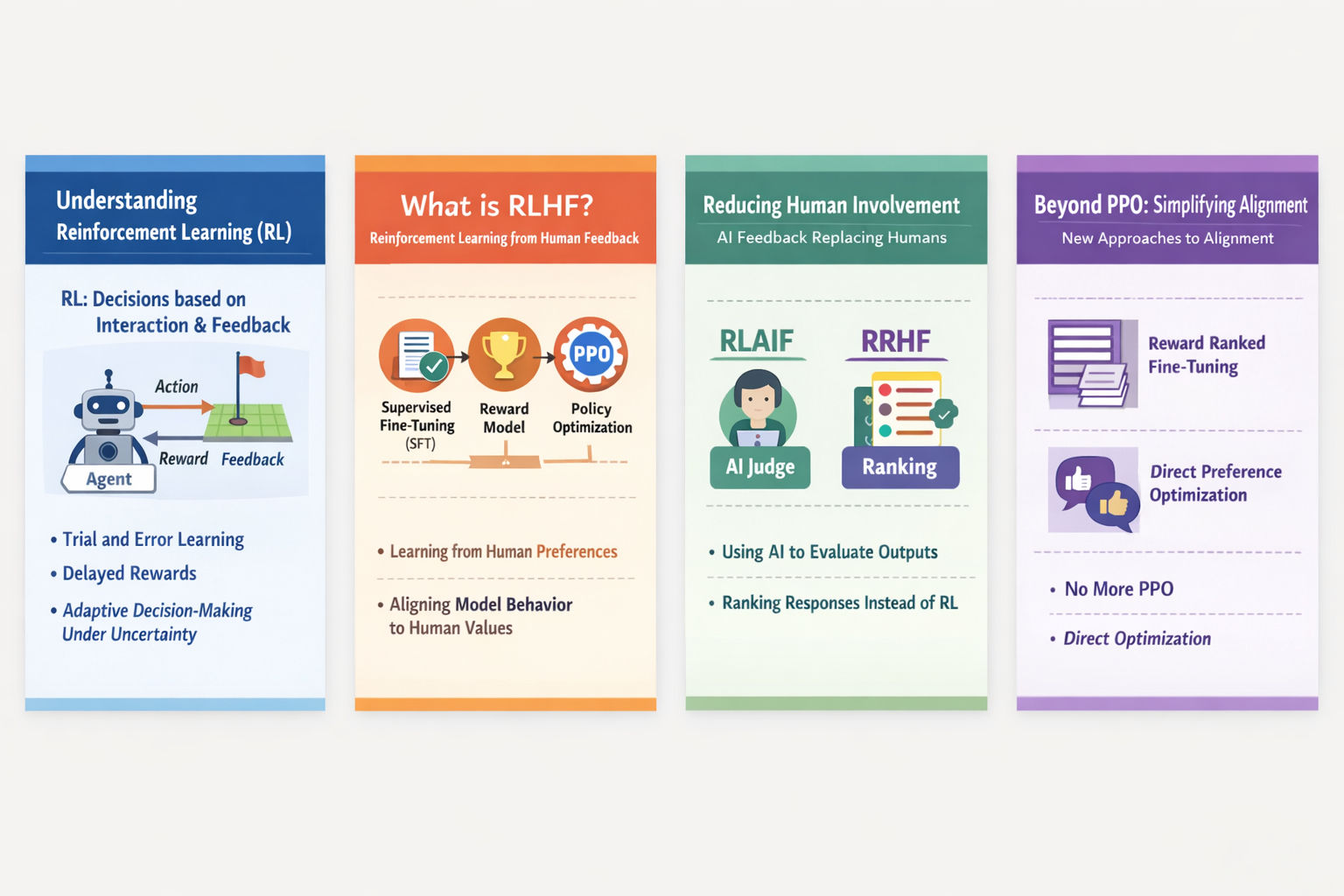

This guide covers reinforcement learning concepts applied to large language models, including RLHF, supervised fine-tuning, reward model training, policy optimization (PPO), practical limitations, and emerging alternatives in alignment.

Reinforcement Learning, RLHF, and the Evolution of Alignment Methods

A Learning-Oriented Resource for Understanding How Large Models Are Aligned

This post is written to help you build intuition, not just memorize terminology. The focus is on how reinforcement learning ideas are adapted to large language models, why RLHF emerged, where it breaks down in practice, and how newer methods simplify or replace it. Think of this as a map of how alignment thinking has evolved.

1. What Is Reinforcement Learning?

Reinforcement Learning (RL) is a learning paradigm centered on interaction and feedback. An agent takes actions in an environment, receives reward signals, and gradually learns a policy that maximizes long-term cumulative reward.

What distinguishes RL from supervised learning is that:

- feedback is often delayed,

- the correct action is not explicitly labeled,

- learning is driven by trial, error, and evaluation.

At its core, RL is about adaptive decision-making under uncertainty. This framing becomes crucial when we apply RL concepts to language models, which do not act in physical environments but instead interact through text.

2. What Is RLHF and Why It Matters

RLHF—Reinforcement Learning from Human Feedback—adapts RL to language models by redefining the “environment” as human preference.

Instead of physical rewards, the model learns from signals such as:

- which response humans prefer,

- which answer is more helpful,

- which output feels safer or more truthful.

The standard RLHF pipeline consists of three stages:

- Supervised Fine-Tuning (SFT) to teach basic instruction-following behavior

- Reward Model (RM) training to approximate human preference judgments

- Policy optimization (often PPO) using the reward model as feedback

This framework played a key role in making GPT-3–era models more aligned with human expectations. Importantly, RLHF does not primarily teach new knowledge—it reshapes how existing knowledge is expressed.

3. Do the Reward Model and Base Model Need to Be the Same?

In theory, the reward model and the policy model can be different. In practice, many implementations impose constraints.

When both models share:

- the same tokenizer,

- the same vocabulary,

- and similar architectural assumptions,

training becomes simpler and more stable. This is why PPO-based RLHF pipelines often select reward models from the same family as the base model.

This reflects a deeper principle: alignment is easier when representations are compatible. Mismatched tokenization or embedding spaces can introduce subtle failure modes.

4. The Practical Limitations of RLHF

Despite its success, RLHF has significant real-world costs.

Human preference data is expensive to collect, slow to iterate on, and difficult to scale. Each comparison requires time, judgment, and consistency.

The training pipeline itself is long. Running SFT, then RM training, then PPO means slow experimentation cycles and high operational complexity.

Compute cost is another bottleneck. PPO-based RLHF often involves:

- a policy model,

- a reference model,

- a reward model,

- and inference-time sampling models.

This can mean four large models active simultaneously, pushing memory and compute requirements beyond what many teams can afford.

5. Reducing Human Cost: AI Replacing Humans

One major direction of innovation focuses on replacing human feedback with model-generated feedback.

RLAIF: Reinforcement Learning from AI Feedback

RLAIF uses AI models as proxy annotators. Instead of humans judging outputs, another model evaluates and corrects responses.

During early stages, one model generates samples while another critiques them. These critiques are then used to fine-tune the base model. During later RL stages, an AI-trained reward model replaces human judgment entirely.

This approach dramatically improves scalability, though it introduces a new risk: feedback bias amplification, where models reinforce each other’s mistakes.

RRHF: Rank Response from Human Feedback

RRHF takes a different path. It removes reinforcement learning altogether.

Multiple candidate responses are generated, often by different models. Humans rank these responses by preference. A ranking-based loss is then used directly to fine-tune the model.

Interestingly, a model trained this way can function both as:

- a generation model,

- and a preference or reward model.

RRHF highlights an important insight: ranking is often enough. Explicit reward modeling is not always necessary.

6. Shortening the Pipeline: Data-Centric Alignment

Another major shift is moving away from complex training pipelines toward data quality optimization.

The core assumption is simple but powerful:

If the data is good enough, the model will align itself.

LIMA: Less Is More for Alignment

LIMA argues that most reasoning and knowledge are learned during pretraining. Alignment is primarily about reshaping output distribution, not teaching new skills.

By carefully curating a small, high-quality dataset, LIMA shows that supervised fine-tuning alone can achieve strong alignment—without RLHF.

This reframes alignment as a data selection problem, not an optimization problem.

“Only 0.5% Data Is Needed”

This idea pushes the same logic further. Instead of more data, focus on the most informative samples.

By identifying high-impact examples, models can achieve strong performance with a tiny fraction of the original dataset. This dramatically reduces training cost while preserving or even improving quality.

The broader lesson: not all data is equally valuable.

7. Reducing PPO Cost: Simplifying Training Objectives

A third direction focuses on eliminating PPO itself.

RAFT: Reward Ranked Fine-Tuning

RAFT combines reward supervision with ranking-based fine-tuning. Instead of running a full RL loop, it directly optimizes model outputs using ranked signals.

This preserves preference learning while avoiding PPO’s instability and compute overhead.

DPO: Direct Preference Optimization

DPO represents a more radical simplification.

It introduces a direct objective that:

- uses pairwise preference comparisons,

- removes the need for a separate reward model,

- eliminates PPO entirely.

DPO reframes alignment as a pure optimization problem, not a reinforcement learning one. This greatly simplifies engineering while maintaining strong empirical performance.

Final Perspective

The evolution from RLHF to RLAIF, LIMA, RAFT, and DPO reflects a broader trend:

Alignment is moving from heavy reinforcement learning toward simpler, more data- and objective-driven methods.

The key insight is that large language models already possess vast capabilities. Alignment is less about teaching them how to think and more about shaping how they respond.

Understanding this shift will help you reason about future alignment techniques—many of which may not look like reinforcement learning at all.

Comments (0)