1.1 What Is an ML System Design Interview and Why It Matters

What Is an ML System Design Interview?

If you have been preparing for tech interviews, you have probably encountered coding interviews, behavioral interviews, and maybe even traditional system design interviews. But there is a special breed of interview that has become increasingly common at top tech companies: the ML system design interview.

In this lesson, we will break down exactly what these interviews are, why companies love them, and what separates candidates who crush them from candidates who stumble through them. By the end, you will know what to expect, what interviewers are really evaluating, and how to avoid the traps that sink most candidates.

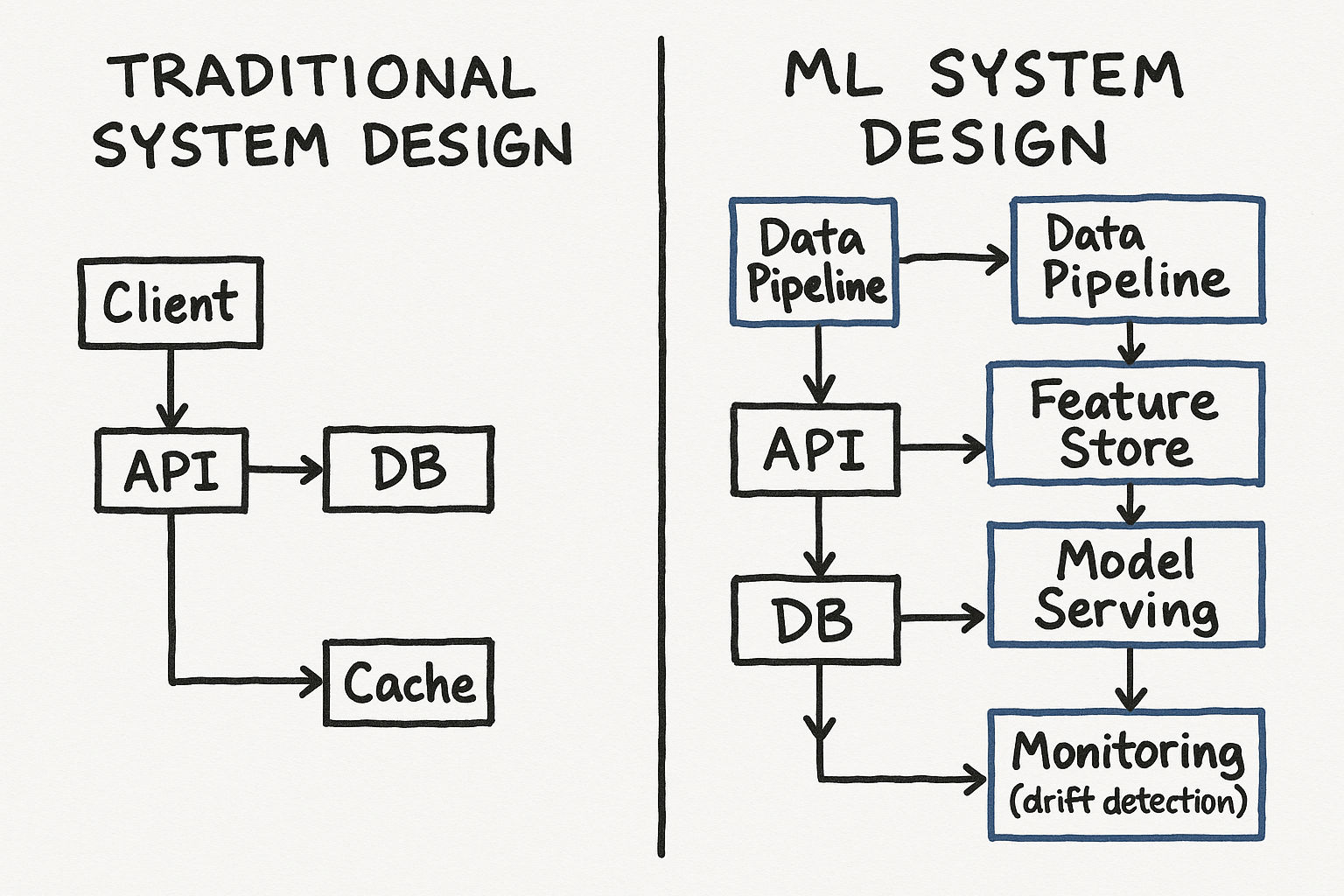

How ML System Design Differs from Regular System Design

In a traditional system design interview, you might be asked to design a URL shortener or design a chat application. The focus is on distributed systems fundamentals -- databases, caching, load balancing, message queues, and API design.

An ML system design interview flips the script. You will be asked something like:

Design a news feed ranking system

Design a fraud detection system for payments

Design a recommendation engine for an e-commerce platform

Design a content moderation system for a social network

The key difference? The core of the system is a machine learning model, and you need to think end-to-end about how data flows in, how models get trained, how predictions get served, and how the whole thing gets monitored in production.

Here is a detailed comparison that you should internalize:

| Aspect | Traditional System Design | ML System Design | ML Coding Interview |
|---|---|---|---|
| Core challenge | Scalability and reliability | ML problem framing and model integration | Algorithm implementation correctness |
| Data discussion | Storage and retrieval | Collection, labeling, features, drift | Given dataset, focus on processing |
| Evaluation criteria | Latency, throughput, availability | Offline metrics + online business metrics | Code correctness, time/space complexity |
| Iteration style | Version upgrades | Model retraining, A/B testing | Optimize solution on the spot |
| Ambiguity level | Moderate | Very high -- no single right answer | Low -- clear problem statement |
| Drawing diagrams | Architecture diagrams | ML pipeline diagrams | Rarely needed |
| Duration | 45-60 minutes | 45-60 minutes (sometimes 90) | 45-60 minutes |
| Who drives | Candidate leads | Candidate leads | Interviewer guides |
| Typical seniority | All levels | Mid to senior (L4+) | All levels |

Understanding these differences is critical because many candidates prepare for one type and walk into another. If you treat an ML system design interview like a traditional system design interview, you will spend too much time on infrastructure and not enough on the ML reasoning. If you treat it like a coding interview, you will try to implement algorithms instead of designing systems.

Interview tip: Do not treat ML system design as traditional system design with some ML sprinkled on top. The ML reasoning IS the interview. System components are the supporting cast.

Why Companies Ask These Questions

Companies do not ask ML system design questions to test whether you can recite the architecture of a transformer. They ask because they want to know: can you think like an ML engineer who ships real products?

Here is what they are really testing:

1. Problem framing ability. Can you take a vague business problem and turn it into a well-defined ML task? This is arguably the hardest and most valuable skill. At Meta, an interviewer might say "improve the News Feed" and expect you to decide whether that means predicting clicks, predicting meaningful social interactions, or predicting time well spent. Each framing leads to a completely different system.

2. Data thinking. Do you instinctively ask about data availability, quality, and labeling? Or do you jump straight to model architecture? Engineers who think about data first are the ones who succeed in production ML. An experienced ML engineer at Google once shared that 80 percent of their team's time goes into data pipelines and feature engineering, and only 20 percent into model architecture. Your interview should reflect that reality.

3. Model selection with justification. They do not care if you pick XGBoost or a neural network. They care about why you picked it and what trade-offs you considered. Saying "I would use a transformer because it is state of the art" is weak. Saying "I would start with logistic regression because our features are mostly tabular with known interactions, and the interpretability helps us debug issues in the first version, then move to gradient boosted trees if we need to capture non-linear interactions" -- that is strong.

4. System integration awareness. Can the model actually be served at the required latency? How does it fit into the existing product? What happens when the model is wrong? At Amazon, if a product recommendation model fails, showing popular items is a reasonable fallback. At a self-driving car company, there is no such fallback. Your awareness of failure modes shows maturity.

5. Trade-off reasoning. Every ML system involves trade-offs -- precision vs. recall, latency vs. accuracy, complexity vs. maintainability. Can you articulate these clearly? For example, in a content moderation system at TikTok, high recall (catching most harmful content) typically matters more than high precision, so some false positives are acceptable. At a news platform, high precision matters more because incorrectly flagging legitimate news damages trust.

6. Communication and collaboration. The interview format tests whether you can explain technical decisions to a colleague. Can you draw a clear diagram? Can you respond to pushback without getting defensive? Can you incorporate feedback from the interviewer gracefully? These soft skills are evaluated throughout.
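The precision-versus-recall trade-off from point 5 is easier to discuss with numbers in hand. The sketch below is a minimal illustration, not a production evaluator: the scores and labels are made-up values for a hypothetical harmful-content classifier, and `precision_recall` is a helper name introduced here. It shows how moving the decision threshold trades one metric for the other.

```python
def precision_recall(scores, labels, threshold):
    """Return (precision, recall) when flagging items with score >= threshold."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y == 1)
    fp = sum(1 for s, y in pairs if s >= threshold and y == 0)
    fn = sum(1 for s, y in pairs if s < threshold and y == 1)
    precision = tp / (tp + fp) if (tp + fp) else 1.0  # nothing flagged: no false alarms
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical model scores (probability of "harmful") and ground-truth labels.
scores = [0.95, 0.90, 0.80, 0.60, 0.55, 0.40, 0.30, 0.20]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

for threshold in (0.3, 0.7):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

On this toy data the low threshold catches every harmful item at the cost of more false positives, while the high threshold flags fewer legitimate items but misses half of the harmful ones. Which end you lean toward is exactly the product decision the interviewer wants you to justify.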

What This Interview Is NOT

Let us clear up some confusion. ML system design is not:

An ML coding interview -- You will not implement backpropagation or write a training loop. Those are separate interviews that test your ability to code ML algorithms from scratch. In an ML coding interview at Google, you might implement k-means clustering or write a gradient descent optimizer. That is a different skill set.

A traditional system design interview -- You will not spend 40 minutes drawing load balancers and database sharding strategies. Infrastructure matters, but it is not the star of the show. If you find yourself spending more than 5 minutes on database choices, you have gone off track.

An ML trivia quiz -- Nobody is going to ask you to derive the gradient of cross-entropy loss. This is about applied thinking, not textbook knowledge. You should know what cross-entropy loss is and when to use it, but deriving it from scratch is for a different interview.

A Kaggle competition -- Competition ML optimizes a fixed metric on a fixed dataset. Production ML deals with messy data, changing requirements, and real users. Kaggle teaches you model tuning. ML system design teaches you to build systems that work in the real world.

A research paper presentation -- You are not expected to propose novel architectures. Interviewers want proven, practical approaches. Using a well-understood model like gradient boosted trees with solid justification is better than proposing a custom neural architecture you read about last week.

Companies That Ask ML System Design Questions

Pretty much every major tech company with significant ML products now includes some form of ML system design interview. Here is a more detailed breakdown of what each company tends to focus on:

Meta -- Heavy emphasis on ranking and recommendation systems (News Feed, Ads, Reels, People You May Know). Interviewers often ask about engagement prediction, content integrity, and ad relevance. Example questions: Design a system to rank Stories for a user. Design a model to detect misinformation.

Google -- Broad ML system design across Search, YouTube, Ads, and Cloud AI. Google interviews tend to be more open-ended and expect strong fundamentals. Example questions: Design YouTube's video recommendation system. Design a smart compose feature for Gmail.

Amazon -- Product recommendations, Alexa, fraud detection, supply chain optimization. Amazon values customer obsession, so framing everything in terms of customer impact resonates. Example questions: Design a product recommendation system. Design a system to predict delivery times.

Netflix -- Recommendation systems, content optimization, streaming quality prediction. Netflix interviews go deep on personalization and A/B testing culture. Example question: Design the homepage personalization system for Netflix.

LinkedIn -- Feed ranking, job recommendations, People You May Know, InMail targeting. LinkedIn problems often involve two-sided marketplaces (job seekers and recruiters). Example question: Design a system to recommend jobs to users.

Apple -- Siri, on-device ML, privacy-preserving ML systems. Apple uniquely emphasizes on-device inference and differential privacy. Example question: Design an on-device keyboard prediction system.

TikTok / ByteDance -- Content ranking, creator recommendations, content moderation. The For You page is one of the most sophisticated recommendation systems in the world. Example question: Design the For You Page ranking system.

Uber / Lyft -- ETA prediction, surge pricing, fraud detection, driver-rider matching. Real-time and geospatial ML is common. Example question: Design a system to predict ride ETAs.

Airbnb -- Search ranking, pricing suggestions, trust and safety, guest-host matching. Example question: Design a dynamic pricing suggestion system for hosts.

Twitter / X -- Timeline ranking, ad targeting, content moderation, trend detection. Example question: Design a system to rank tweets in the home timeline.

Spotify -- Music recommendation, podcast discovery, playlist generation. Example question: Design Discover Weekly.

Pinterest -- Visual search, pin recommendation, ad targeting. Example question: Design a visual search system for finding similar products.

These interviews are especially common for roles titled ML Engineer, Applied Scientist, Research Engineer, and increasingly for senior Software Engineer roles at ML-heavy companies.

Interview tip: Research the company's ML products before your interview. If you are interviewing at Netflix, think about recommendation systems. At Uber, think about real-time prediction systems. Tailoring your mental models to the company's domain gives you a huge edge. Read its engineering blog -- the Meta Engineering Blog, Netflix Tech Blog, and Uber Engineering Blog all publish detailed posts about their ML systems.

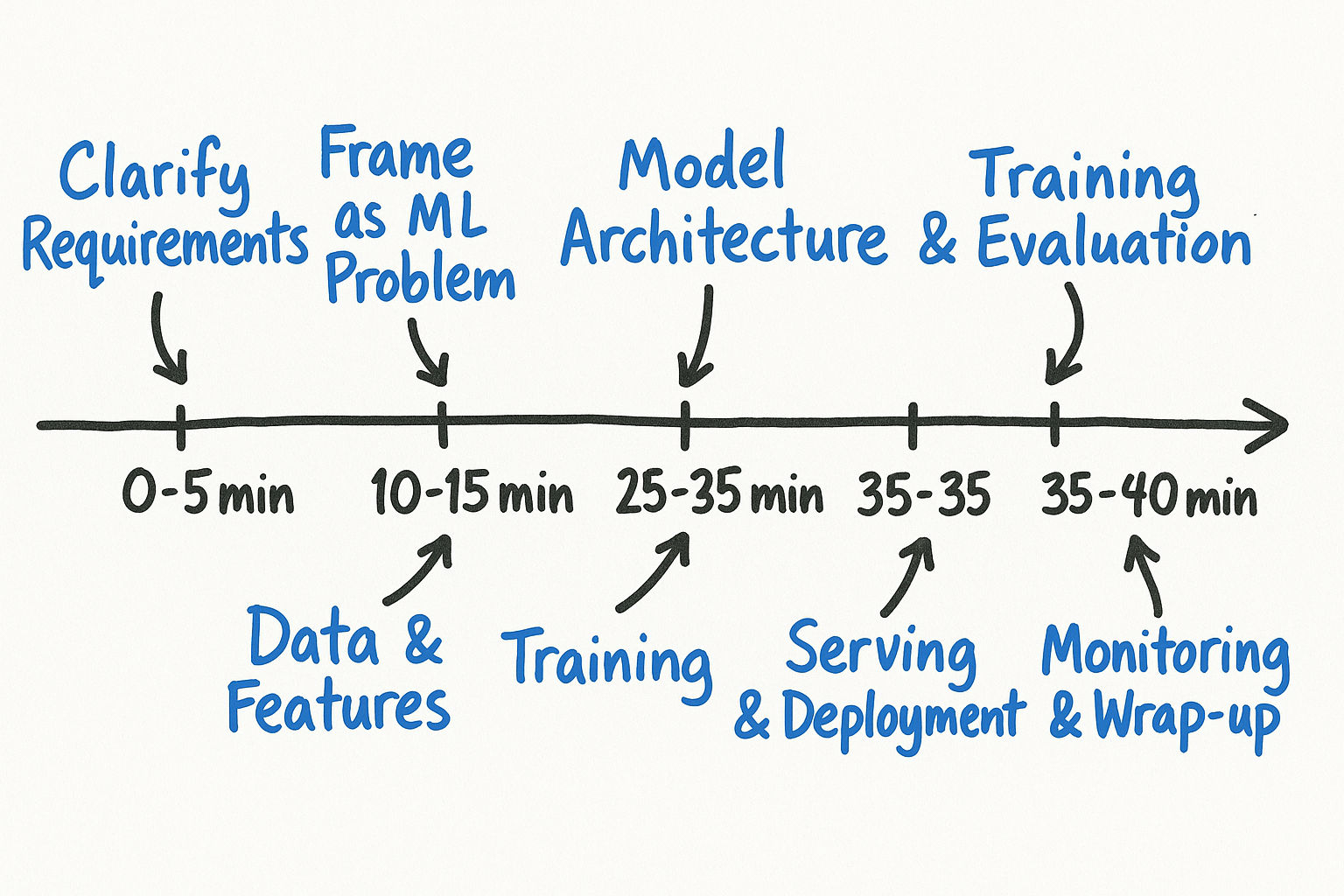

The Typical Interview Format: A Minute-by-Minute Walkthrough

Here is what you can expect in a typical 45 to 60 minute ML system design interview. Understanding the timing helps you allocate your energy correctly.

Minutes 0-3: Problem Statement

The interviewer gives you an open-ended prompt. It is usually one or two sentences. Examples: Design a system to detect harmful content on our platform. Design a recommendation system for our marketplace. Design an ad click prediction model.

The interviewer will not give you much detail on purpose. They want to see if you can navigate ambiguity. Do not panic at the vagueness -- it is intentional.

Minutes 3-10: Clarification and Scoping

This is where you ask questions to narrow the problem. You should have 5-8 prepared clarifying questions in your mental toolkit. Here is what great candidates sound like during this phase:

Before I start designing, I want to make sure I understand the scope. When you say harmful content, are we talking about hate speech, violence, spam, misinformation, or all of the above? And are we focusing on text content, images, videos, or multi-modal?

What is the scale we are designing for? Roughly how many pieces of content are uploaded per day? And what is our latency requirement -- do we need to classify content before it is shown to any user, or is some delay acceptable?

What does success look like from a business perspective? Are we optimizing to minimize the amount of harmful content that users see, or are we also trying to minimize false positives where legitimate content gets removed?

Minutes 10-40: Solution Design

This is the core of the interview. You walk through your approach end-to-end, covering data, features, model, training, evaluation, serving, and monitoring. You should be drawing diagrams throughout this section. A good ML system design diagram shows the data pipeline, feature computation, model training loop, serving infrastructure, and monitoring system.

During this phase, aim to spend roughly:

5 minutes on data sources and labeling strategy

5 minutes on feature engineering

8 minutes on model architecture (baseline to complex progression)

5 minutes on training approach and evaluation metrics

5 minutes on serving and deployment

2 minutes on monitoring

Minutes 40-55: Deep Dive

The interviewer picks one or two areas to probe deeper. This is where they test your depth. Common deep dive areas include: How would you handle class imbalance in this problem? Walk me through exactly how you would set up the A/B test. What specific features would you engineer for this use case? How would you detect model drift and what would trigger retraining? What happens when the model is wrong and what is the user experience?

You cannot predict which area they will probe, so you need reasonable depth across all areas. However, if the interviewer specializes in infrastructure, they will likely probe serving and monitoring. If they are a research scientist, they will probe model architecture and evaluation.
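If the deep dive lands on class imbalance, one answer you can make concrete is inverse-frequency class weighting. The sketch below is one simple option among several (resampling and threshold tuning are others): `balanced_class_weights` is a helper name introduced here, and the 990-to-10 label split is an invented example. The formula, `n_samples / (n_classes * class_count)`, is the same heuristic scikit-learn applies for `class_weight="balanced"`.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical fraud dataset: 990 legitimate (0) and 10 fraudulent (1) examples.
labels = [0] * 990 + [1] * 10
weights = balanced_class_weights(labels)
print(weights)
```

With these weights, each class contributes the same total weight to the loss, so the rare class is no longer drowned out by the majority. In the interview, pair this with a note on which metric you would watch, since accuracy is misleading on data this skewed.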

Minutes 55-60: Questions for Interviewer

Have 2-3 genuine questions ready. Good questions include asking about the team's ML infrastructure, their biggest challenges in production ML, or how they approach A/B testing.

Key characteristics to remember:

There is no single right answer. Two candidates can propose completely different architectures and both earn a strong-hire rating.

You are expected to drive the conversation. The interviewer wants to see you lead, not wait for prompts.

Drawing diagrams is expected and helpful, whether on a whiteboard or virtual drawing tool.

You are evaluated on breadth and depth -- can you cover the full pipeline AND go deep when asked?

Silence is bad. If you need to think, say "Let me think about this for a moment" rather than going quiet for 30 seconds.

What Each Level Is Expected to Demonstrate

Companies calibrate their expectations based on your level. Understanding what is expected at your level helps you allocate your preparation time.

L3 / Junior ML Engineer (0-2 years experience)

Demonstrate understanding of the basic ML pipeline: data, model, training, evaluation

Frame the problem correctly as an ML task

Propose a reasonable model choice with basic justification

Show awareness that serving and monitoring exist, even if you cannot go deep

Handle basic follow-up questions about your choices

It is okay to not know production infrastructure deeply

L4 / Mid-Level ML Engineer (2-5 years experience)

All of the above, plus:

Strong data thinking -- discuss data sources, labeling strategies, and feature engineering in detail

Show iterative model development (baseline to complex) with clear justification at each step

Discuss offline and online evaluation with specific metrics

Show awareness of training-serving skew and how to prevent it

Discuss deployment strategies (A/B testing, canary rollouts)

Handle most follow-up questions confidently
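When you discuss canary rollouts or A/B tests at this level, it helps to be able to say how traffic is actually split. One common approach is deterministic hash bucketing, sketched below under stated assumptions: the function name `in_canary`, the salt string, and the user ID format are all hypothetical, and a real system would typically delegate this to an experimentation platform.

```python
import hashlib


def in_canary(user_id: str, canary_percent: float, salt: str = "model-v2-canary") -> bool:
    """Deterministically assign a user to the canary group.

    The same user always gets the same answer, and raising canary_percent
    only adds users to the canary; it never removes existing ones.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in 0..9999
    return bucket < canary_percent * 100   # e.g. 5 percent -> buckets 0..499


# Route roughly 5 percent of hypothetical users to the new model.
users = [f"user-{i}" for i in range(10_000)]
canary_share = sum(in_canary(u, 5) for u in users) / len(users)
print(f"share of users in canary: {canary_share:.3f}")
```

Hashing on a stable ID keeps each user's experience consistent across requests, and changing the salt per experiment avoids reusing the same users as the canary group every time.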

L5 / Senior ML Engineer (5-8 years experience)

All of the above, plus:

Crisp problem scoping that demonstrates you have done this many times

Deep data pipeline discussion -- you should be able to design the feature engineering pipeline in detail

Propose multi-stage systems where appropriate (candidate generation, ranking, re-ranking)

Discuss trade-offs proactively without the interviewer prompting

Strong monitoring and maintenance discussion -- what dashboards would you build, what alerts would you set

Discuss how the system evolves over time (v1, v2, v3)

Handle curveball questions and pivot gracefully
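The multi-stage pattern mentioned above (candidate generation, ranking, re-ranking) can be sketched in a few lines. This is a toy illustration only: the catalog, the category-match retrieval rule, the use of `popularity` as a stand-in for a learned model score, and every function name are assumptions made for the example.

```python
def generate_candidates(user_interests, catalog, limit):
    """Stage 1: cheap retrieval -- keep items in categories the user cares about."""
    matches = [item for item in catalog if item["category"] in user_interests]
    return matches[:limit]


def rank(candidates, score_fn):
    """Stage 2: score the smaller candidate set with a more expensive model."""
    return sorted(candidates, key=score_fn, reverse=True)


def rerank_with_category_cap(ranked, max_per_category, k):
    """Stage 3: apply a diversity rule -- cap items per category in the top k."""
    result, per_category = [], {}
    for item in ranked:
        if len(result) == k:
            break
        used = per_category.get(item["category"], 0)
        if used < max_per_category:
            result.append(item)
            per_category[item["category"]] = used + 1
    return result


# Hypothetical catalog; "popularity" stands in for a model's predicted score.
catalog = [
    {"id": "a", "category": "sports", "popularity": 0.90},
    {"id": "b", "category": "sports", "popularity": 0.80},
    {"id": "c", "category": "sports", "popularity": 0.70},
    {"id": "d", "category": "music", "popularity": 0.60},
    {"id": "e", "category": "cooking", "popularity": 0.95},
    {"id": "f", "category": "music", "popularity": 0.50},
]

candidates = generate_candidates({"sports", "music"}, catalog, limit=100)
ranked = rank(candidates, score_fn=lambda item: item["popularity"])
top = rerank_with_category_cap(ranked, max_per_category=2, k=3)
print([item["id"] for item in top])
```

The point to make in the interview is the cost structure: the first stage must be cheap enough to scan a huge catalog, the second can afford a heavier model because it sees far fewer items, and the third encodes product rules that a pure relevance score would not capture.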

L6+ / Staff ML Engineer (8+ years experience)

All of the above, plus:

System-level thinking -- how does this ML system interact with other systems in the company

Organizational considerations -- how many engineers does this require, how would you phase the project

Strategic trade-offs -- build vs. buy, invest in infrastructure vs. ship fast

Cross-functional awareness -- how does this affect product, policy, legal, trust and safety teams

Ability to identify the single most impactful thing to do first and justify why

Deep expertise in at least one area (infrastructure, model architecture, evaluation methodology)

Interview tip: If you are interviewing for L5+, the interviewer expects you to proactively discuss trade-offs, failure modes, and system evolution without being prompted. At L3-L4, it is fine to discuss these when asked.

Common Mistakes Candidates Make

After coaching hundreds of candidates, I see the same patterns in people who struggle. I am including specific examples so you can recognize these patterns in your own practice.

Mistake 1: Jumping Straight to Model Architecture

The interviewer says "design a recommendation system" and the candidate immediately starts talking about collaborative filtering vs. content-based filtering. Stop. You have not even clarified what you are recommending, to whom, or what success looks like.

What this sounds like: "So for recommendations, I would use a two-tower neural network with user embeddings and item embeddings trained with contrastive loss..."

What it should sound like: "Before I dive into the approach, I want to understand the problem space. What are we recommending -- products, content, people? What is the primary surface -- homepage, search results, email? And what does success look like -- clicks, purchases, time spent?"

Mistake 2: Ignoring Data Entirely

Data is the foundation of every ML system. If you spend 40 minutes talking about models and 0 minutes talking about data collection, labeling, and feature engineering, you have missed the most important part.

What this sounds like: "I would use a transformer model with self-attention layers and fine-tune it on our dataset..."

What the interviewer is thinking: "What dataset? Where does it come from? How is it labeled? What are the features? This person has never built a real ML system."

Mistake 3: Forgetting About Serving and Monitoring

Your model is useless if it cannot serve predictions at the required latency. And it is dangerous if nobody is monitoring it for drift. Production thinking separates senior candidates from junior ones.

What this looks like: The candidate finishes their model discussion and says "and then we deploy it." That is it. No mention of serving infrastructure, latency requirements, A/B testing, monitoring, or what happens when things go wrong.

What a strong candidate says: "For serving, I would deploy the model behind a prediction service with a p99 latency target of 50ms. I would use a canary deployment, routing 5 percent of traffic initially, monitoring prediction distributions and business metrics for a week before full rollout. I would set up alerts for feature drift using PSI and model performance degradation using daily AUC tracking."
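If you mention PSI (population stability index) for feature drift, be ready to explain how it is computed. The sketch below shows one common formulation, assuming equal-width bins derived from the training sample and a small epsilon to avoid taking the log of zero. The function name `psi`, the bin count, and the sample data are choices made for this example rather than a standard API.

```python
import math


def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population stability index between a reference and a current sample.

    Bins are equal-width over the range of `expected` (e.g. training data);
    values of `actual` outside that range fall into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training data vs. two serving-time samples.
training = [i / 100 for i in range(100)]
serving_stable = list(training)
serving_shifted = [min(x + 0.3, 0.99) for x in training]

print(f"stable:  {psi(training, serving_stable):.3f}")
print(f"shifted: {psi(training, serving_shifted):.3f}")
```

A commonly quoted rule of thumb treats PSI below about 0.1 as stable and above about 0.25 as a significant shift. Treat those cutoffs as conventions to validate on your own features, not as guarantees, and say so if the interviewer pushes on them.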

Mistake 4: Not Quantifying Anything

"We will use a large model" -- how large? "We need low latency" -- how low? "We will retrain periodically" -- how often? Put numbers on things, even rough estimates.

Weak: "The system needs to be fast."

Strong: "Given that this is in the critical path of page load, we need p99 latency under 100ms. With a model of this size, that means we will likely need model quantization and should consider caching embeddings for frequent users."
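It is worth being able to explain why latency targets are stated as p99 rather than as an average. The sketch below uses the nearest-rank percentile definition on an invented latency sample (940 fast requests, 40 moderate, 20 slow). The helper name `percentile` and all the numbers are assumptions for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest value with at least pct percent
    of samples at or below it. `pct` is an integer from 1 to 100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical request latencies in milliseconds.
latencies_ms = [20] * 940 + [60] * 40 + [250] * 20

mean_ms = sum(latencies_ms) / len(latencies_ms)
p50_ms = percentile(latencies_ms, 50)
p99_ms = percentile(latencies_ms, 99)

print(f"mean={mean_ms:.1f}ms  p50={p50_ms}ms  p99={p99_ms}ms")
```

In this sample the mean and median both look comfortable against a 100ms budget, yet the p99 does not, because 2 percent of requests hit a slow path. That gap is the reason to quote tail latency when the model sits in the critical path of a page load.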

Mistake 5: Treating It as a Monologue

The best interviews feel like a collaborative design session. Check in with your interviewer. Ask "Does this direction make sense?" or "Should I go deeper here or move on?" Some candidates talk for 20 minutes straight without pausing. The interviewer has feedback and hints to offer -- let them.

Mistake 6: Over-Engineering the First Version

Do not propose a multi-modal transformer ensemble with real-time feature stores as your starting point. Start simple. Show you understand iterative development -- baseline first, complexity later.

What this sounds like: "For v1, I would build a multi-task learning model with shared bottom layers, task-specific towers, and a mixture of experts gating network..."

What it should sound like: "For v1, I would start with a simple logistic regression model using a handful of well-understood features -- user activity level, item popularity, and basic content category. This gives us a baseline to beat. For v2, I would move to gradient boosted trees to capture non-linear feature interactions. For v3, if we have enough data and engineering resources, I would consider a deep learning approach."

Mistake 7: Not Connecting ML Metrics to Business Metrics

Many candidates discuss AUC or F1 score but never explain what these mean for the actual product and business.

Weak: "We would evaluate using AUC-ROC and aim for above 0.85."

Strong: "We would evaluate using AUC-ROC offline -- I would aim for above 0.85 as a baseline. But the real success metric is the impact on user engagement. I would run an A/B test measuring daily active users, average session length, and 7-day retention. If our model improves DAU by 1 percent with no degradation in content diversity, I would consider it a success."
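To connect the two halves of that answer, it helps to know what each number means. The sketch below computes AUC-ROC from its ranking definition (the probability that a random positive is scored above a random negative, with ties counted as half) and the relative lift of a business metric between A/B arms. The function names and all data are illustrative assumptions, and the pairwise AUC loop is quadratic, so it suits explanation rather than large datasets.

```python
def auc_roc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


def relative_lift(control, treatment):
    """Relative change of a business metric in treatment vs. control."""
    return (treatment - control) / control


# Offline: hypothetical model scores and click labels.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
labels = [1, 1, 0, 1, 0, 0]
offline_auc = auc_roc(scores, labels)

# Online: hypothetical daily active users in each A/B arm.
dau_lift = relative_lift(control=1_000_000, treatment=1_010_000)

print(f"offline AUC={offline_auc:.3f}, online DAU lift={dau_lift:.1%}")
```

A real A/B readout would also need a significance test and guardrail metrics before calling the 1 percent lift a win. The sketch only shows how the offline and online numbers are defined.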

Mistake 8: Copying a System You Read About Without Understanding It

Some candidates memorize a specific architecture from a blog post or paper and try to reproduce it regardless of the problem. Interviewers can tell. They will ask "why this approach?" and, if you cannot explain the reasoning behind each decision, your answer falls apart.

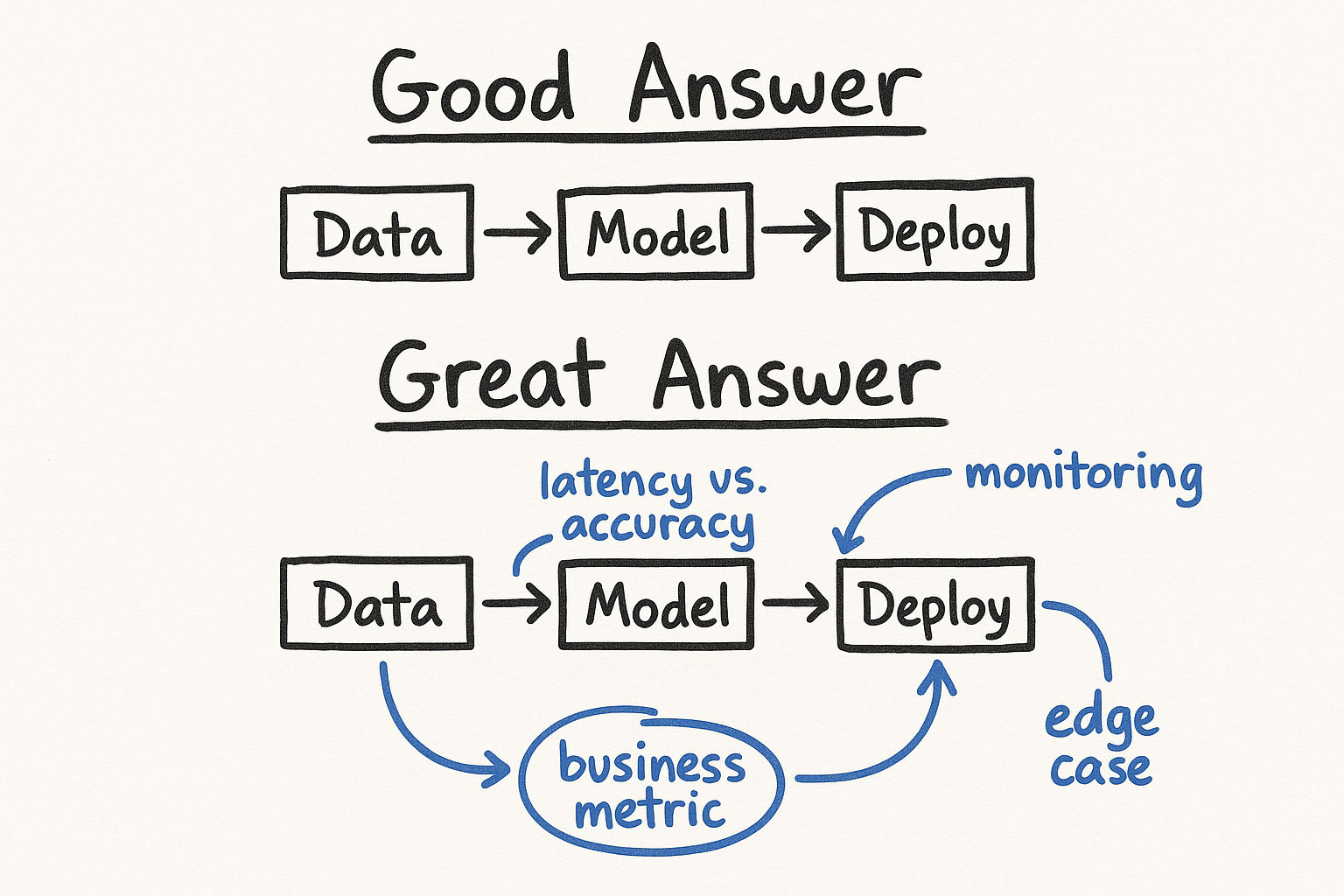

What Good Looks Like vs What Great Looks Like

A good answer:

Covers the main components: problem framing, data, model, training, evaluation, serving

Makes reasonable choices with some justification

Shows awareness of trade-offs

Responds well to interviewer follow-ups

Gets through most of the pipeline in the allotted time

A great answer:

Starts with crisp requirement clarification that shapes the entire design

Discusses data collection, labeling strategies, and feature engineering in depth

Proposes a progression -- start with a simple baseline, explain why, then iterate toward more complex approaches

Connects offline metrics to online business metrics ("We optimize for AUC offline, but the real success metric is user retention")

Proactively addresses failure modes ("What happens when the model is wrong? What is the fallback?")

Discusses monitoring, retraining triggers, and how the system evolves over time

Uses concrete numbers and estimates throughout ("We have roughly 100M users, 10K new items per day, need p99 latency under 50ms")

Draws clear, well-organized diagrams that the interviewer can follow

Makes the interviewer feel like they are talking to someone who has actually built and shipped ML systems

Handles deep dive questions with specific, detailed answers rather than hand-waving
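The "concrete numbers" habit from the list above usually comes down to quick back-of-envelope arithmetic. The sketch below turns a user count into an approximate serving load. Only the 100M users figure comes from the example above. The daily-active fraction, requests per user, and peak factor are invented assumptions that you would confirm with the interviewer.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def estimate_qps(total_users, daily_active_fraction, requests_per_user_per_day, peak_factor):
    """Return (average_qps, peak_qps) for a prediction service."""
    daily_requests = total_users * daily_active_fraction * requests_per_user_per_day
    average_qps = daily_requests / SECONDS_PER_DAY
    return average_qps, average_qps * peak_factor


# Assumed inputs: 30 percent of users active daily, 10 ranking requests each,
# and peak traffic at 3x the daily average.
average_qps, peak_qps = estimate_qps(
    total_users=100_000_000,
    daily_active_fraction=0.3,
    requests_per_user_per_day=10,
    peak_factor=3,
)
print(f"average ~{average_qps:,.0f} QPS, peak ~{peak_qps:,.0f} QPS")
```

Under these assumptions the service sees a few thousand requests per second on average and roughly ten thousand at peak. The exact figures matter less than showing that your latency target and model size were chosen with a load estimate in mind.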

Interview tip: The single biggest differentiator between good and great candidates is data thinking. Great candidates spend 30-40 percent of their time on data -- where it comes from, how it is labeled, what features matter, and what can go wrong. This signals real-world experience.

Real Interview Questions by Company

To help you prepare, here are real and commonly reported ML system design questions organized by company. These are drawn from interview reports, preparation forums, and coaching experience.

Meta

Design the News Feed ranking system

Design a system to detect fake accounts

Design an ad click-through rate prediction model

Design a system to recommend Facebook Groups to users

Design a content integrity system to detect policy-violating posts

Design a notification relevance system (which notifications to send and when)

Google

Design YouTube video recommendations

Design Google Smart Compose (autocomplete for email)

Design a query auto-suggestion system for Google Search

Design a harmful content detection system for YouTube

Design a system to predict which search results users will click

Amazon

Design the "Customers who bought this also bought" recommendation system

Design a system to detect fraudulent product reviews

Design a demand forecasting system for inventory planning

Design Alexa's intent classification system

Uber / Lyft

Design an ETA prediction system for rides

Design a surge pricing model

Design a system to detect fraudulent rides

Design a driver-rider matching system

Netflix / Spotify

Design the Netflix homepage personalization system

Design Spotify Discover Weekly playlist generation

Design a system to select thumbnail images for Netflix shows

These questions share common patterns -- most involve ranking, recommendation, classification, or prediction. The framework in the next lesson will prepare you to handle all of them.

How to Use This Course

This course is structured to give you a complete framework for tackling any ML system design interview. Here is the roadmap:

The Framework (next lesson) -- A step-by-step approach you can apply to any ML system design problem

Data and Features -- Deep dive into data engineering and feature engineering for ML systems

Applied Problems -- Walk-throughs of the most commonly asked ML system design questions

Each lesson builds on the previous one. By the end, you will have a mental model that lets you confidently tackle any ML system design question thrown at you.

The framework in the next lesson is the single most important thing in this course. Master it, and you will have a structured approach that works for any problem. Let us get into it.

Key Takeaways

ML system design interviews test end-to-end ML thinking, not just model knowledge

They differ from both traditional system design and ML coding interviews -- know how, as laid out in the comparison table

There is no single right answer -- interviewers evaluate your reasoning process

The biggest mistakes are jumping to models too fast, ignoring data, and not quantifying your decisions

Great candidates show iterative thinking: start with a simple baseline, then add complexity only when it is justified

Data thinking is the number one differentiator between good and great candidates

Every major tech company with ML products now asks these questions

Level expectations matter -- L3 needs basic pipeline coverage, L5+ needs proactive trade-off discussion and system evolution thinking

Research the company's ML products and read its engineering blog before your interview

Practice with a timer -- knowing how to allocate your 45-60 minutes is as important as knowing the content